Paper on Build It, Break It, Fix It Competition Sheds Light on Building Secure Software

After running a unique cybersecurity contest three times in 2015, competition organizers from the Maryland Cybersecurity Center (MC2) say they have uncovered some interesting results about how to successfully build secure software and break insecure software.

The results of their research, recently published in the paper "Build It, Break It, Fix It: Contesting Secure Development," have also influenced the latest iteration of the competition, which kicks off today.

Build It, Break It, Fix It was first launched in 2014 with the goal of teaching college students the latest skills in writing, implementing and debugging software. It does this by challenging them not only to hack into a competitor's system, but also to build a computing platform that can fend off attacks.

During the Build It round, builders write software for a system specified by the contest organizers. In the Break It round, breakers (hackers) look for flaws in the programs submitted by other teams. During the Fix It round, builders attempt to patch any vulnerabilities in their software that the breaker teams exploited.

Teams can compete in either the Build It or Break It category—or they can choose to enter in both.

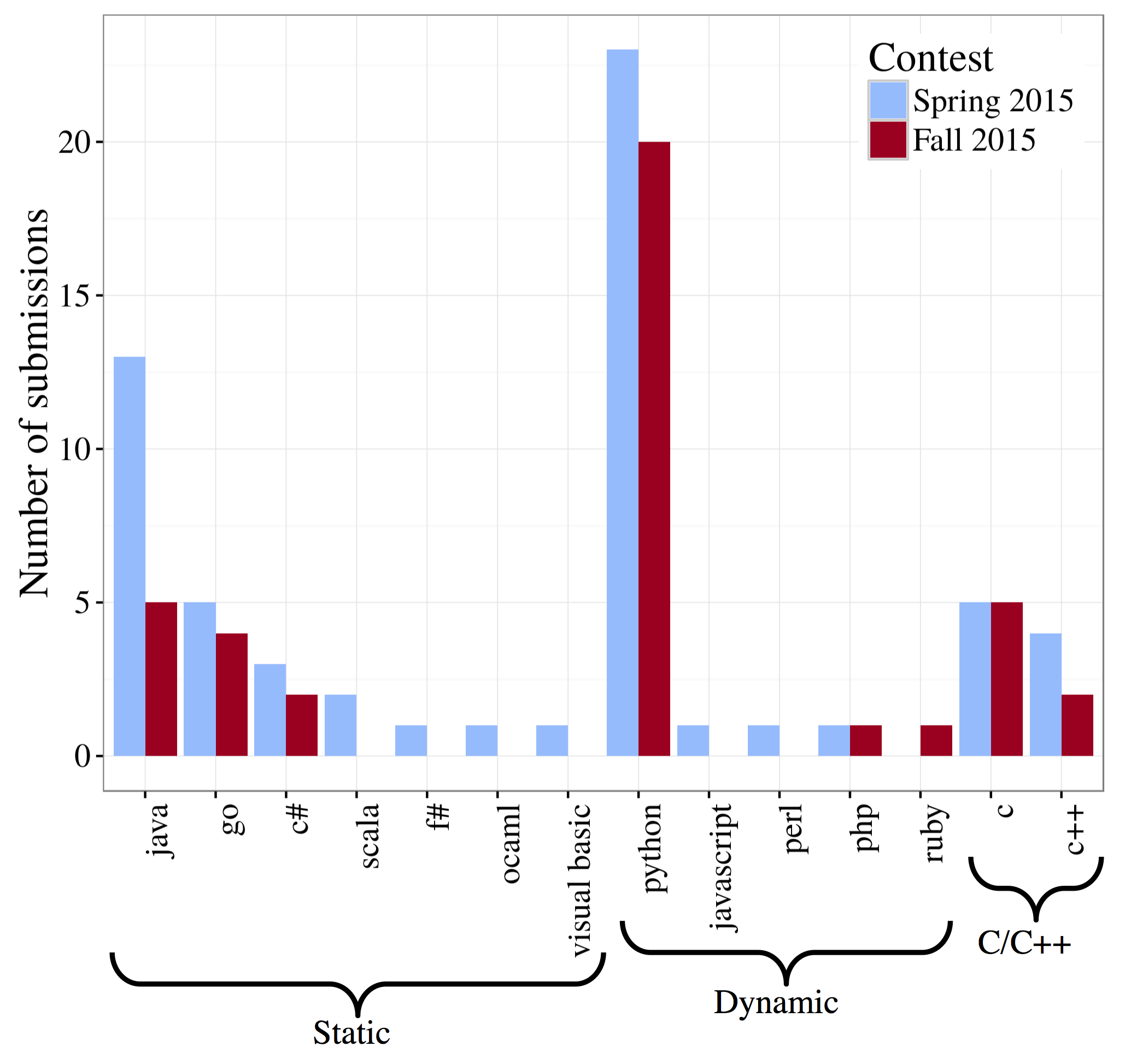

Looking at 116 teams tackling two programming problems, the paper's authors say a quantitative analysis found that the most efficient (fastest-running) Build It submissions were written in C/C++, but that submissions coded in other statically typed languages were less likely to have a security flaw.

"At some level, this confirms experts' conventional wisdom that modern 'statically typed' languages are safer," says Michael Hicks, a professor of computer science in MC2 who conceived the competition. "The nice thing is that this is an empirical result: We observed that when these languages were used in the competition and faced off head-to-head, C/C++ programs were less secure but ran faster."

The researchers also found that Build It teams with more diverse programming-language knowledge produced more secure code, and that shorter programs correlated with better scores.

Hicks says one particularly interesting finding is that Break It teams that were also successful Build It teams were significantly better at finding security bugs.

"The security industry today often imagines ‘farming out’ the task of making sure a program is secure to separate experts, who are independent of those who constructed a system," Hicks says. "Our results suggest that this may not be the most effective approach: Those who build a system have insights into how a system may break that those who haven't built it may not have."

Due to the success and value of the competition, Hicks has spoken with faculty at the University of Maryland and other universities—such as Princeton and Carnegie Mellon—about making the contest infrastructure freely available. The paper will also be presented at next month's ACM Conference on Computer and Communications Security in Vienna, Austria.

For the first time this year, Build It, Break It, Fix It is open to undergraduate or graduate students enrolled at any accredited university worldwide, not just U.S. students.

More than 250 university teams have registered to compete this year, making this year's pool of potential participants larger than in any previous year, Hicks says. Forty-one of those teams are from the University of Maryland.

The prize pool for this year's competition, which wraps up October 20, totals up to $13,500, to be shared among the winning teams. In prior contests, only the top two teams received cash prizes; this time the prize money will be split among the top three teams and teams that place in honorable mention categories.

Hicks says he hopes this change in how prizes are awarded, along with revised scoring for the final round, will encourage more teams to see the competition through to the end.

Michelle Mazurek, a co-author on the paper and an assistant professor of computer science with joint appointments in MC2 and the Human-Computer Interaction Lab, says she is excited to see what this year’s competition will bring.

She notes that the contest is valuable to both students and researchers.

"It's valuable for the participants because they get to practice thinking about and implementing security from both an attacker and a defender's perspective," she says. "It's valuable for us as researchers because it can be difficult to test ideas about secure development meaningfully. The contest allows us to examine these ideas in an environment that is partially controlled but also realistic, which provides useful, high-quality data that is otherwise hard to get—better data means better insights for how to help developers end up with more secure code."

Funding for the contest infrastructure is provided by the National Science Foundation. Sponsors include MC2, Booz Allen Hamilton, Leidos, AsTech Consulting, and Trail of Bits.

—Story by Melissa Brachfeld